This log will focus on open methods for solving non-linear equations, including fixed-point iteration, Newton-Raphson method, Bairstow’s method, Quotient Difference algorithm and Muller’s method.

Slides reference: Prof. Sun, Peng – Non-Linear Equation Solvers

Textbook reference: Numerical Methods for Engineers SEVENTH EDITION

Open? Close?

The “close” methods are actually called bracketing methods. For these methods, we start with an interval that brackets the root. Then, we utilize properties of the target function to narrow down the interval iteratively. Typical examples of bracketing methods include the bisection method and the false position method.

Open methods, one the other hand, starts the iteration with a single starting value of x or two starting values that do not “bracket” the root. Because of this, they can sometimes diverge from the true root. However, when they converge, they usually do so much more quickly than bracketing methods.

General functions

Simple fixed-point iteration

Description

For a function ![]() , we rearrange the function in the form of

, we rearrange the function in the form of ![]() such that

such that ![]() .

.

In the case of ![]() , there are 3 different ways to rearrange them:

, there are 3 different ways to rearrange them:

Algorithm

Given a initial guess of the root ![]() , the guess is updated iteratively:

, the guess is updated iteratively: ![]() .

.

We also need a indicator to evaluate the convergence and to decide when to stop the iteration. The following error indicator serves this purpose:

![]()

Convergence

One way to check whether the algorithm will yield convergent results is the two-curve graphical method.

For the function ![]() , we rearrange into the form of

, we rearrange into the form of ![]() . If we plot

. If we plot ![]() and

and ![]() respectively, then the intersection of the two curves represents the root we are looking for. The graph allows us the visualize the iterations as follows (It is easy to see that the algorithm converges!):

respectively, then the intersection of the two curves represents the root we are looking for. The graph allows us the visualize the iterations as follows (It is easy to see that the algorithm converges!):

From this graphical perspective, we can also derive the sufficient condition for convergence is ![]() . The following is the proof:

. The following is the proof:

Note that ![]() is the error for step

is the error for step ![]() and

and ![]() is the error for step

is the error for step ![]() . If we want the algorithm to converge, we want the error at step

. If we want the algorithm to converge, we want the error at step ![]() smaller than the error at step

smaller than the error at step ![]() . Hence, a sufficient condition for convergence is

. Hence, a sufficient condition for convergence is ![]() .

.

An offshoot of the analysis is that it also demonstrates that when

the method converges, the error is roughly proportional to and less

than the error of the previous step. For this reason, simple fixed-point

iteration is said to be linearly convergent. (Taken from textbook)

Aitken acceleration

Aitken acceleration will improve the convergence speed from linear to quadratic in ideal condition (which satisfies the ”geometric series’ like assumption).

We define:

Then the acceleration scheme becomes:

Newton-Raphson method

In fixed-point iteration, the only property from the target function is that local values of ![]() given a certain

given a certain ![]() . Therefore, another direction to improve the convergence speed is trying to utilize more properties from the target function

. Therefore, another direction to improve the convergence speed is trying to utilize more properties from the target function ![]() . This is exactly what we do in the Newton-Raphson method,

. This is exactly what we do in the Newton-Raphson method,

In the Newton-Raphson method, we use the information of derivatives. The following graph gives a good geometric intuition.

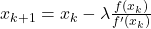

Update rule

The first derivative at ![]() is equivalent to the slope:

is equivalent to the slope:

![]()

which can rearranged to yield:

![]()

Taylor series

Pitfalls

Just as every other open method, the Newton-Raphson method come with no guarantee of convergence. This diagram depicts the four common pitfalls that would lead to slow convergence/divergence of the method.

- (a): Divergence: Inflection point in the vicinity of the root, i.e.,

- (b): Root-jumping: Near-zero slope encounter

- (c): Oscillations: Iterations can oscillate around a local minima or maxim

- (d): Division-by-0: Zero slope

To deal with these potential pitfalls, we can:

- Test with multiple initial values

- Adopt a hyperparameter

to scale the step (the “learning rate”):

to scale the step (the “learning rate”):

- And always remember to set the max iteration!

Secant method

The secant method is useful for the cases where the first-order derivatives cannot be computed analytically – a requirement for the Newton-Raphson method.

Update rule

Start with two values: ![]() and

and ![]()

You may find that the secant method is not that different from the Newton-Raphson method. Basically, it adopts additional estimation for the first order derivative.

Polynomials

To be honest, this section is considerably more complex but not complicated. I really don’t have energy to cover everything and I don’t find it fit to include too much details in the log. So please refer to the slides attached on this section.

Bairstow’s method (for complex roots)

Quotient-Difference Algorithm

Quadratic Interpolation

Muller’s method

A quadratic interpolation method – using parabola to fit the curve (Compare with Newton-Raphson and false position – these are linear interpolation methods. The ideas are actually similiar.)

发表回复